ML Safety Scholars

This summer I took part in ML Safety Scholars, which combined online courses from MIT and the University of Michigan with a newly designed Introduction to ML Safety course. The program introduces students to the fundamentals of deep learning and ML safety. It is run by the Center for AI Safety and was designed by Dan Hendrycks. The final project was yet another MNIST classifier, but this time with as many safety features as possible. The program lasted about 10 weeks, which overlapped with my relocation to Turkey, so it took some effort to finish on time.

What I would do differently to get more out of the program is to deepen my knowledge of mathematics beforehand (probability theory, information theory, multivariable calculus). Understanding things like entropy and various probability distributions, or implementing backpropagation, requires ready-to-use knowledge, and acquiring it in the middle of a battle with some task while time is running out is much harder. So it seems better to use spaced repetition rather than massed practice (see the book Make It Stick by Brown et al.).

This program is like a bootcamp for the ML safety field. It won’t teach you how to build state-of-the-art models, but it will introduce you to the latest concepts in ML safety. The first part on ML (the first 2 weeks) wasn’t new to me because I had finished an ML intro course before and had also done the FastAI course. But the DL for CV and ML Safety parts were completely new to me. The hardest part is perhaps the last one, as its concepts build on the previous ones and target specific areas. The final project was fun to make because it revisited most of the material we had learnt, but with the aim of synthesis: connecting all these methods and techniques together.

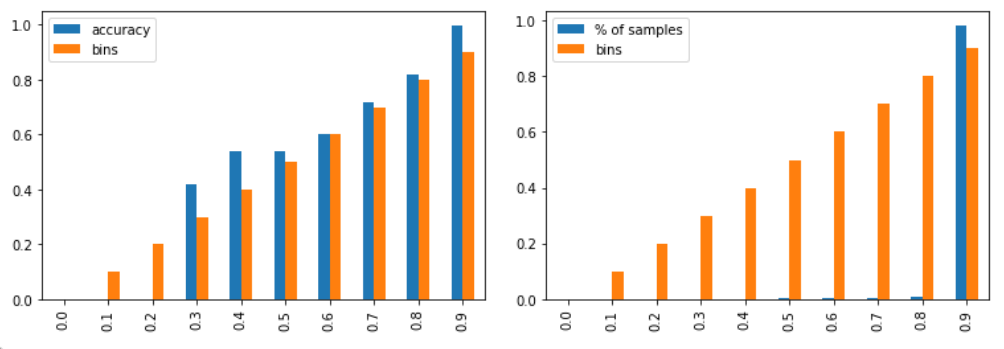

The picture in the header shows the calibration of one of the models we could train.
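
For readers who have not met calibration before, here is a minimal sketch of one common way to measure it, the expected calibration error (ECE). It is not the code from my project: the function name, the choice of 10 equal-width bins, and the toy inputs are all assumptions made for illustration. A model is well calibrated when, among predictions made with confidence around 0.9, roughly 90% are correct.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Hypothetical helper: ECE with equal-width confidence bins.

    confidences: predicted probability of the chosen class, in [0, 1]
    correct:     1 if the prediction was right, 0 otherwise
    """
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)

    # Interior bin edges: 0.1, 0.2, ..., 0.9 for n_bins=10 (an assumption).
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    bin_ids = np.clip(np.digitize(confidences, edges[1:-1]), 0, n_bins - 1)

    ece = 0.0
    for b in range(n_bins):
        mask = bin_ids == b
        if mask.any():
            # Gap between accuracy and mean confidence inside this bin,
            # weighted by the fraction of samples that fall in the bin.
            gap = abs(correct[mask].mean() - confidences[mask].mean())
            ece += mask.mean() * gap
    return ece

# Toy example: an overconfident model that says 0.9 but is right half the time.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 0, 0]))
```

A value near zero means confidence and accuracy agree; larger values mean the model is over- or underconfident. A calibration plot like the one above shows the same per-bin gap graphically.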